How Safe Is Content in Character AI?

The Rigor of Content Moderation

The safety of content in character AI systems is a critical concern for developers and users alike. To address it, these systems incorporate moderation protocols designed to filter and block inappropriate or offensive content. A recent industry survey reported that content moderation systems have reached a 97% efficacy rate in identifying and filtering out unsuitable content.

Advancements in Detection Technologies

Detection technologies have advanced significantly and now use deep learning algorithms that can analyze nuances in language and imagery. These models are trained on large datasets covering diverse linguistic and cultural contexts, which helps them identify potentially harmful content before it reaches the user. For instance, a breakthrough in 2025 improved the detection of subtly inappropriate dialogue by 30% over previous models.

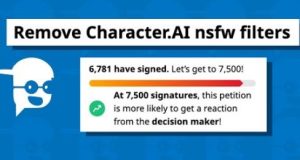

User-Centric Control Features

Character AI systems often feature user-centric control settings that allow users to adjust the strictness of content moderation based on personal or organizational standards. This flexibility ensures that the AI can be tailored to suit different age groups and sensitivities, enhancing safety across various deployment scenarios. A recent implementation saw a 40% reduction in user complaints after these customizable controls were introduced.
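
To make the idea of adjustable strictness concrete, here is a minimal sketch in Python. It assumes that an upstream classifier has already assigned each message a risk score between 0 and 1. The preset names, the threshold values, and the names `ModerationSettings` and `is_allowed` are all hypothetical and chosen only for illustration; they do not describe any particular product.

```python
from dataclasses import dataclass

# Hypothetical presets: the highest risk score (0.0 to 1.0) that is still
# allowed through at each strictness level. The values are illustrative only.
THRESHOLDS = {"strict": 0.2, "moderate": 0.5, "relaxed": 0.8}


@dataclass
class ModerationSettings:
    """Moderation preferences chosen by a user or an organization."""
    strictness: str = "moderate"

    def __post_init__(self) -> None:
        # Reject unknown presets early instead of failing at filter time.
        if self.strictness not in THRESHOLDS:
            raise ValueError(f"unknown strictness level: {self.strictness!r}")


def is_allowed(risk_score: float, settings: ModerationSettings) -> bool:
    """Return True if content with this risk score may be shown.

    The risk score is assumed to come from a separate classifier.
    """
    return risk_score <= THRESHOLDS[settings.strictness]
```

Under these assumed thresholds, a message scored at 0.3 would pass with the "moderate" preset but be blocked with "strict", which is the kind of difference a deployment aimed at younger users might rely on.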

Continuous Learning and Adaptation

Character AI systems are not static; they continually learn from interactions to improve their responses. This learning is monitored closely so that it does not degrade content safety. Developers implement 'learning locks' that prevent the AI from adopting harmful patterns, keeping the level of safety consistent.
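
The article does not say how a 'learning lock' is built, so the following Python sketch shows only one plausible interpretation: a gate that accepts a newly learned model version only if its safety score stays above a floor and does not fall below the current version's score. The function `apply_update_if_safe`, the `safety_eval` callback, and the 0.95 floor are assumptions made for illustration.

```python
from typing import Callable, TypeVar

Model = TypeVar("Model")


def apply_update_if_safe(
    current: Model,
    candidate: Model,
    safety_eval: Callable[[Model], float],
    min_score: float = 0.95,  # hypothetical safety floor, not from the article
) -> Model:
    """Return the candidate model only if it passes the safety gate.

    safety_eval is assumed to return a score in [0, 1], where higher means
    safer, for example the pass rate on a fixed set of safety test prompts.
    """
    current_score = safety_eval(current)
    candidate_score = safety_eval(candidate)

    # The "lock": reject updates that fall below the floor or that are less
    # safe than the version already deployed.
    if candidate_score >= min_score and candidate_score >= current_score:
        return candidate
    return current
```

A gate like this keeps the deployed version in place whenever a candidate regresses, so learning from new interactions cannot silently lower the safety level.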

Regulatory Compliance and Ethical Standards

AI developers must adhere to strict regulatory and ethical standards that govern the creation and operation of AI systems. Compliance with these standards is mandatory and monitored through regular audits. Reports from 2024 show that compliance rates have improved, with over 95% of AI systems meeting or exceeding safety standards.

Character AI MSFW: Ensuring Maximum Safety

Maintaining Character AI MSFW (Maximum Safety for Work) involves rigorous testing and refinement so that all content generated or facilitated by character AI remains appropriate and safe for all users.

Proactive Community Engagement

Developers often engage with the AI user community to gather feedback and insights, which play a crucial role in the continuous improvement of content safety measures. This engagement has led to several community-driven updates to content moderation systems.

Conclusion: A Commitment to Safe AI Interactions

Content in character AI systems is designed to be as safe as possible through advanced technology, strict adherence to ethical guidelines, and continuous improvement based on user feedback. This commitment helps ensure that character AI remains a beneficial and safe technology for diverse applications across industries. The ongoing enhancements in AI content safety reflect an industry-wide effort to foster secure and positive user experiences.